I recently used AI to analyze interview transcripts about a nonprofit’s past crises. The executive team thought they were describing five separate problems: a grant compliance issue, a financial reporting delay, a program launch that missed its deadlines, an audit finding, and a donor communication breakdown. The AI flagged something else entirely. Every single incident involved the same communication gap between finance and program staff. Same pattern. Same delay. Same departments.

Nobody in the organization called it a “communication risk.” They just saw isolated fires they kept putting out.

Why Humans Miss What AI Catches

When you’re inside an organization managing crises as they happen, you focus on the specific incident. The grant deadline you missed. The audit finding you’re addressing. The donor who needed an explanation. You don’t see the meta-pattern.

AI doesn’t have that limitation. It analyzes transcripts without the emotional weight of each crisis. It spots recurring phrases, identifies timing patterns, and maps communication flows across incidents that happened months or years apart. In that nonprofit’s case, the pattern was clear: finance discovered problems an average of 3–4 weeks after program staff knew something was wrong. Every time. The specific crises changed, but the communication lag stayed constant.

That’s not five risks. That’s one systemic vulnerability showing up five different ways.
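To make the timing analysis concrete, here is a minimal sketch of how a lag like that could be measured once each incident is tagged with two dates: when program staff first knew something was wrong, and when finance found out. The `Incident` record, its field names, and the dates in the usage lines are all hypothetical illustrations, not the nonprofit’s actual data or my exact tooling. The idea is simply that a consistent lag (a steady average with a small spread) points to one recurring handoff gap rather than unrelated failures.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean, pstdev


@dataclass
class Incident:
    """One crisis, with the dates each department first knew about it (hypothetical fields)."""
    name: str
    program_aware: date  # when program staff first knew something was wrong
    finance_aware: date  # when finance first learned of the problem


def lag_weeks(incident: Incident) -> float:
    """Weeks between program staff noticing a problem and finance learning of it."""
    return (incident.finance_aware - incident.program_aware).days / 7


def summarize_lags(incidents: list[Incident]) -> tuple[float, float]:
    """Return (mean lag, population standard deviation), both in weeks.

    A large mean with a small spread suggests the same delay keeps recurring.
    """
    lags = [lag_weeks(i) for i in incidents]
    return mean(lags), pstdev(lags)


# Illustrative, made-up dates for two incidents.
incidents = [
    Incident("grant compliance", date(2023, 1, 2), date(2023, 1, 23)),  # 21 days
    Incident("audit finding", date(2023, 5, 1), date(2023, 5, 29)),     # 28 days
]
avg, spread = summarize_lags(incidents)
print(f"Average lag: {avg:.1f} weeks (spread {spread:.1f})")
```

With these invented dates the lags are 3 and 4 weeks, so the average is 3.5 weeks with a spread of 0.5.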

The Predictive Power of Organizational DNA

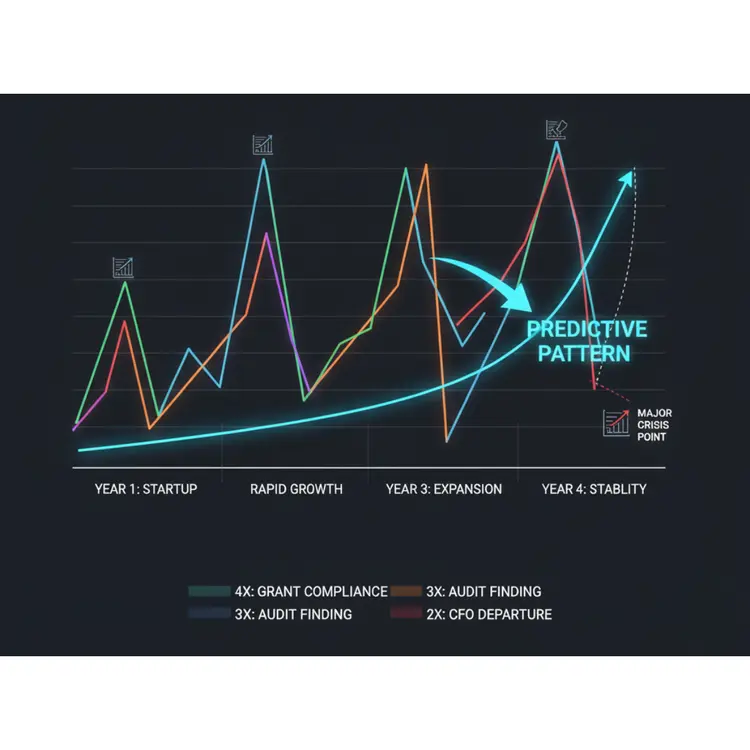

Here’s where AI-powered risk identification gets interesting. Once I map a nonprofit’s risk patterns, I search for comparator organizations with a similar budget size, staffing model, growth trajectory, and mission focus. The data shows what tends to come next. Take a $3M nonprofit in year two of rapid growth with a three-person finance team. I can show its leaders what happened to five similar organizations in year three. Four of them hit major grant compliance issues. Three faced audit findings. All of them went through the same staffing crisis when their CFO left without documented processes.

It’s not prediction. It’s pattern recognition across organizational DNA. You’re on a trajectory other organizations have already traveled. They hit predictable obstacles at predictable stages. The risks aren’t random—they’re structural.
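As a rough illustration of what “finding comparators” can mean in practice, the sketch below treats it as a nearest-neighbor search: keep only organizations with the same mission category, then rank them by distance on budget, finance staffing, and growth rate. This is an assumed, simplified technique, not a description of any specific tool. The `OrgProfile` fields, the log scale for budget, and the scaling constants for staff and growth are hypothetical choices you would tune to your own data.

```python
import math
from dataclasses import dataclass


@dataclass
class OrgProfile:
    name: str
    budget_usd: float  # annual operating budget
    finance_staff: int  # headcount on the finance team
    growth_rate: float  # year-over-year budget growth, e.g. 0.40 = 40%
    mission: str  # coarse mission category (hypothetical labels)


def distance(a: OrgProfile, b: OrgProfile) -> float:
    """Euclidean distance on illustrative scales (lower = more similar)."""
    # Log scale: a $1M gap matters more between small budgets than large ones.
    d_budget = math.log10(a.budget_usd) - math.log10(b.budget_usd)
    d_staff = (a.finance_staff - b.finance_staff) / 3  # assumption: 3 staff ~ one unit
    d_growth = (a.growth_rate - b.growth_rate) / 0.25  # assumption: 25 points ~ one unit
    return math.sqrt(d_budget**2 + d_staff**2 + d_growth**2)


def find_comparators(target: OrgProfile, pool: list[OrgProfile], k: int = 5) -> list[OrgProfile]:
    """Return the k closest organizations sharing the target's mission category."""
    candidates = [o for o in pool if o.mission == target.mission and o.name != target.name]
    return sorted(candidates, key=lambda o: distance(target, o))[:k]


# Illustrative, invented profiles.
target = OrgProfile("Target", 3_000_000, 3, 0.40, "youth services")
pool = [
    OrgProfile("Org A", 3_200_000, 3, 0.35, "youth services"),
    OrgProfile("Org B", 2_500_000, 2, 0.50, "youth services"),
    OrgProfile("Org C", 3_000_000, 3, 0.40, "arts"),
    OrgProfile("Org D", 12_000_000, 10, 0.05, "youth services"),
]
matches = find_comparators(target, pool, k=2)
print([o.name for o in matches])
```

Filtering on mission first and ranking on the numeric features second keeps the comparison to organizations doing similar work; the outcome data (compliance issues, audit findings, staffing crises) would then be pulled for whichever organizations come back.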

Breaking Through “We’re Different”

The most common resistance I encounter: “But we’re different.” They point to their strong board oversight. Their experienced executive team. Their better technology systems. They acknowledge the data, but they believe their specific circumstances create immunity. What they don’t realize is that this confidence is itself part of the pattern.

With one executive, I first affirmed the organization’s real strength in board oversight, then asked: “Given that strength, what would it look like if you proactively used it to prepare for this risk now, rather than waiting to see if it happens?”

The reframe shifted everything. Not “you’re too good for this to happen,” but “you’re good enough to prepare before it happens.”

The organizations in the comparison data weren’t weak. They were competent nonprofits that didn’t anticipate specific vulnerabilities.

From Hidden Risks to Proactive Preparation

AI reveals three types of hidden risks that traditional risk assessments tend to miss:

Pattern risks: Issues that look like separate incidents but share root causes. The communication gaps. The documentation failures. The single points of failure.

Trajectory risks: Challenges that emerge at predictable organizational stages based on comparator data. The compliance issues that hit during rapid growth. The staffing crises that follow key departures.

Confidence risks: The belief that your strengths make you immune to patterns that affected similar organizations. Organizational exceptionalism that prevents preparation.

When I present this analysis to nonprofit leaders, the conversation shifts. They stop asking “what might go wrong?” and start asking “what do we need to strengthen now?” That’s the difference between reactive risk management and proactive organizational resilience.

What AI Can’t Replace

AI spots patterns. It identifies comparators. It flags hidden risks. But it can’t have the conversation that follows.

When a CEO sees that their organization’s communication breakdown mirrors five other nonprofits’ pre-crisis patterns, they need human expertise to interpret what that means for their specific context. When data shows trajectory risks ahead, they need strategic guidance on which preparations matter most.

AI is the diagnostic tool. Human expertise is the treatment plan.

I use AI to analyze what happened and predict what’s coming. Then I work with leadership teams to build the communication systems, document the critical processes, and practice the crisis scenarios that transform their risk profile before the next crisis hits.

Because the next crisis isn’t random. It’s already visible in the patterns—you just need the right tools to see it.